Abstract

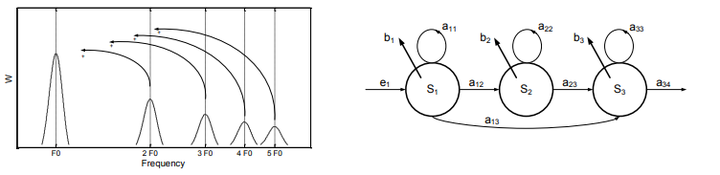

The automatic transcription of music recordings, with the objective of deriving a score-like representation from a given audio representation, is a fundamental and challenging task. In particular, for polyphonic music recordings with overlapping sound sources, current transcription systems still struggle to accurately extract the parameters of individual notes, specified by pitch, onset, and duration. In this article, we present a music transcription system that is carefully designed to cope with various facets of music. One main idea of our approach is to consistently employ a mid-level representation that is based on a musically meaningful pitch scale. To achieve the necessary spectral and temporal resolution, we use a multi-resolution Fourier transform enhanced by instantaneous frequency estimation. Subsequently, having extracted pitch and note onset information from this representation, we employ hidden Markov models (HMMs) to determine the note events in a context-sensitive fashion. As another contribution, we evaluate our transcription system on an extensive dataset containing audio recordings of various genres. In contrast to many previous approaches, we do not rely only on synthetic audio material, but also evaluate our system on real audio recordings, using MIDI-audio synchronization techniques to automatically generate reference annotations.
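To make the idea of a pitch-based mid-level representation concrete, the following minimal sketch pools spectral magnitudes into semitone-wide bands. It assumes the equal-tempered MIDI pitch scale with A4 = 440 Hz and a pitch range of 21 to 108 (the piano range); the abstract does not specify these choices, and the function names `hz_to_midi` and `pool_to_pitch_bands` are hypothetical, not taken from the described system.

```python
import math

A4_HZ = 440.0   # reference tuning (assumption, not stated in the text)
A4_MIDI = 69    # MIDI pitch number of A4


def hz_to_midi(freq_hz: float) -> float:
    """Map a frequency in Hz to a (fractional) MIDI pitch number."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    return A4_MIDI + 12.0 * math.log2(freq_hz / A4_HZ)


def pool_to_pitch_bands(freqs_hz, magnitudes, low=21, high=108):
    """Sum spectral magnitudes into semitone-wide pitch bands.

    freqs_hz may hold bin center frequencies or refined
    (e.g. instantaneous) frequency estimates; non-positive
    frequencies and pitches outside [low, high] are ignored.
    """
    bands = {pitch: 0.0 for pitch in range(low, high + 1)}
    for freq, mag in zip(freqs_hz, magnitudes):
        if freq <= 0:
            continue
        pitch = round(hz_to_midi(freq))  # nearest semitone
        if low <= pitch <= high:
            bands[pitch] += mag
    return bands
```

Applied frame by frame, such pooling would turn a spectrogram into a pitch-by-time matrix. Using refined frequency estimates instead of fixed bin centers is one way the assignment of energy to pitch bands could be made more accurate at low frequencies, where semitones are only a few hertz apart.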